Date: 2019-08-03 10:48:09

A few days ago, at the company's self-driving open day, Tesla CEO Elon Musk attacked the technical limitations of lidar as a sensor for self-driving cars: “This is an extremely expensive and useless technology.” The remark set off an uproar in the industry. Will the standing of the three main autonomous-driving sensors (millimeter-wave radar, the vision system or camera, and lidar) have to be reassessed? Meanwhile, researchers from the University of Shanghai for Science and Technology have reportedly developed a new lidar-based perception system that can image objects 45 kilometers (28 miles) away through the smog of an urban environment. The technology uses a single-photon detector combined with a dedicated algorithm that "weaves" even the sparsest data points together into ultra-high-resolution images. The new lidar imaging technique is reported to push significantly past the diffraction limit, and it is expected to open up new possibilities for high-resolution, fast, low-power 3D optical imaging over long distances.

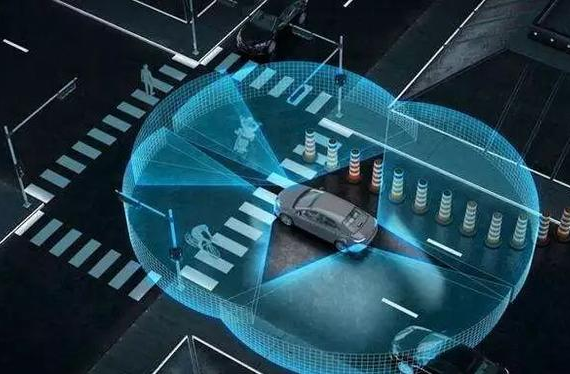

Autonomous driving requires multi-sensor fusion

The safe operation of a self-driving car depends on three essential stages. The first is perception: acquiring information about the external environment and about the vehicle's own operating state. Next comes decision-making, in which driving instructions are issued, relying mainly on algorithms running on various control chips and in the cloud. The final stage is execution, which "puts into action" the commands produced by the decision stage. Perception is therefore the first safeguard for safe autonomous driving, acting as the car's "eyes and ears."

Because road environments and driving conditions are complex and constantly changing, no single type of sensor can keep up with real-time changes in vehicle information. Yi Jihui, vice president of global marketing and application engineering in ON Semiconductor's Intelligent Perception Division, said that sensor fusion (combining vision systems, millimeter-wave radar, and lidar) and sensor depth perception are the future development trends for autonomous-vehicle perception systems.

The vision system (camera) can perform many kinds of recognition using its rich imaging information, such as identifying lanes, pedestrians, objects, parking-lot barriers, and traffic signs, but it performs less well at long-range imaging and speed measurement. Millimeter-wave radar determines the distance, speed, and angle of obstacles by emitting electromagnetic waves in the millimeter band and is largely unaffected by harsh conditions such as rain, snow, dust, and fog. Limited by its wavelength and antenna size, however, millimeter-wave radar has low resolution and is not very sensitive to stationary objects or moving non-metallic objects. Lidar has the highest detection accuracy of the three, reaching the millimeter level. It offers the ranging ability of radar with higher accuracy and resolution, provides camera-like sensing, and does not depend on lighting conditions. Its drawbacks are high cost and vulnerability to extreme weather, smoke, and dust.
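As a rough illustration of how radar and lidar turn echoes into distance and speed, the sketch below applies two textbook relations: range from an echo's round-trip time (distance = c × t / 2, since the wave travels out and back) and radial speed from the two-way Doppler shift (v = f_d × λ / 2). It is a simplified model assuming idealized measurements; real automotive radars and lidars derive these quantities through considerably more elaborate signal processing. The function names and sample values are hypothetical.

```python
# Simplified sketch using idealized textbook relations, not a real sensor pipeline.
C = 299_792_458.0  # speed of light in m/s


def range_from_round_trip(t_seconds: float) -> float:
    """Distance to a target from the echo's round-trip time (wave travels out and back)."""
    return C * t_seconds / 2.0


def radial_speed_from_doppler(doppler_hz: float, carrier_hz: float) -> float:
    """Radial speed from the two-way Doppler shift: v = f_d * wavelength / 2."""
    wavelength = C / carrier_hz
    return doppler_hz * wavelength / 2.0


if __name__ == "__main__":
    # Hypothetical echo arriving 1 microsecond after emission: about 150 m away.
    print(f"range: {range_from_round_trip(1e-6):.1f} m")
    # Hypothetical 10.27 kHz Doppler shift on a 77 GHz carrier: roughly 20 m/s.
    print(f"speed: {radial_speed_from_doppler(10_270, 77e9):.2f} m/s")
```

The factor of two in both formulas reflects the same physical fact: the signal covers the sensor-to-target distance twice.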

Can lidar be replaced?

In recent years, lidar has become a focal point of controversy in the industry. Thanks to its high precision, resolution, and stability, almost every autonomous-vehicle developer has relied on lidar over the past few years, which has let lidar nearly monopolize the sensor market and kept device prices high. Some companies have therefore set out to cut lidar costs in order to become profitable. The first thing Waymo, Google's self-driving spin-off, did after becoming independent was to work on cutting the cost of lidar by 90% from the industry-standard US$75,000. However, cutting costs blindly brings its own problems. Reportedly, to lower overall vehicle prices, some companies have adopted cheaper solid-state lidar, which introduces new issues: it cannot rotate through 360 degrees, so it struggles to detect what is behind the vehicle. Additional sensors must then be added to ensure safe driving, which is not economically viable.

To sidestep the cost of lidar, some automakers, with Tesla as the leading example, have simply blacklisted the technology and pursued other technical routes. According to Tesla's official website, every Tesla comes standard with eight cameras, one 77 GHz millimeter-wave radar, and twelve ultrasonic sensors. Lu Wenliang, general manager of the CCID Consulting Automotive Industry Research Center, said that although lidar does not appear on Tesla's configuration list, Tesla will work to make its other sensors better, and these must also be paired with more powerful back-end sensor and processing chips.

It is no accident, then, that Musk's "lidar is a fool's errand" remark came at this moment: the sensor industry has indeed reached a point where it must rethink its direction. Jin Ye, general manager of the automotive radar division of Beijing Institute of Technology Leike Electronic Information Technology Co., Ltd., told a reporter from "China Electronics News" that reducing lidar costs will take time. At research institutions, lidar will remain the main environmental sensor in the short term; for commercial reasons, however, companies avoiding lidar in favor of other technical routes will become a trend.

Lu Wenliang said there are three possible paths for future autonomous-vehicle sensors. The first is to improve the performance of the sensors themselves (vision systems, millimeter-wave radar, lidar, and so on) and thereby reduce the computing power required at the decision-making end. The second is to use an external network to achieve overall control of autonomous driving; 5G networks will provide a stronger technical foundation for the Internet of Vehicles, but this will be difficult to achieve in the short term. The third is to avoid lidar entirely and fuse other sensors such as cameras, millimeter-wave radar, and ultrasonic sensors, with more powerful chips at the decision-making end compensating for the front-end sensors' lower detection accuracy and other shortcomings.

Jin Ye said that if the cost of lidar can be brought below roughly 1,000 yuan in the future, its chances of becoming the core sensor for driverless vehicles will rise considerably, but reducing that cost has become the biggest challenge. Tesla appears to have abandoned lidar altogether. It collects data through a multi-camera setup, trains neural networks in a simulator that reproduces real-world environments, and relies on intelligent vision to give the vehicle a "cognition" of traffic and road conditions. How reliable this approach is remains hard to judge.

Improving sensor performance is the only way to develop autonomous driving

Whichever path autonomous-driving sensor technology takes, improving the performance of vision systems, millimeter-wave radar, lidar, and other devices is a "basic skill." First, an advanced, intelligent vision system is a core component for ensuring the safe operation of autonomous vehicles, so improving its reliability is particularly important. Yi Jihui said that autonomous driving places higher demands on the intelligence of visual sensors (pixel count, understanding, and judgment).

Tesla's confidence in its self-driving system stems largely from the strength of its vision hardware. Its vision system reportedly comprises eight fisheye, standard, and telephoto cameras: a reversing camera at the rear, a forward-facing trinocular camera, and two side cameras on each side of the car, one facing forward and one facing rearward. The overlapping fields of view of the side cameras eliminate visual blind spots and basically support Tesla's L3-level lane-changing, merging, and highway functions.

Guo Yuansheng, deputy director of the Central Science and Technology Committee of the Jiu San Society and vice chairman of the China Sensor and Internet of Things Industry Alliance, said there is still considerable room to improve cameras' dynamic range and near-infrared sensitivity, and that each vehicle needs more cameras with better performance. Jin Ye said that multi-camera stereo vision is the future development trend for sensor vision systems. It is expected to combine the strengths of lidar and cameras: like lidar, it produces a dense point cloud of distances from which obstacles can be extracted directly and distances measured accurately, while retaining the camera's capacity for visual recognition and machine learning.
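The distance measurement a stereo camera performs rests on triangulation. For an idealized, rectified pair of pinhole cameras, depth Z = f × B / d, where f is the focal length in pixels, B the baseline between the two cameras in meters, and d the disparity in pixels (how far the same point shifts between the left and right images). The sketch below converts a small disparity map into depths under that assumption; the focal length, baseline, and disparity values are hypothetical, and the hard part in practice, finding which pixels match, is not shown.

```python
from typing import List, Optional


def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> Optional[float]:
    """Depth in meters for a rectified stereo pair: Z = f * B / d.

    Returns None when the disparity is not positive (no valid match,
    or a point effectively at infinity).
    """
    if disparity_px <= 0:
        return None
    return focal_px * baseline_m / disparity_px


def depth_map(disparities: List[List[float]], focal_px: float,
              baseline_m: float) -> List[List[Optional[float]]]:
    """Convert a 2-D disparity map into a depth map, pixel by pixel."""
    return [[depth_from_disparity(d, focal_px, baseline_m) for d in row]
            for row in disparities]


if __name__ == "__main__":
    # Hypothetical rig: 1000 px focal length, 0.3 m between the cameras.
    disp = [[30.0, 15.0], [6.0, 0.0]]
    for row in depth_map(disp, focal_px=1000.0, baseline_m=0.3):
        print(row)
```

Because depth varies inversely with disparity, a fixed one-pixel matching error produces a much larger depth error for distant objects than for nearby ones.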

Second, more signal-processing algorithms and new radar technologies will be brought into millimeter-wave radar development to provide a more sensitive perception system for detecting obstacles. Guo Yuansheng said that the automotive millimeter-wave radar modules of recent years contain multiple chips built on different processes, making them somewhat cumbersome and costly. In pursuit of smaller size and lower cost, countries are working on highly integrated, multifunctional radar chipsets that are standardized and general-purpose.
